XOROMANCY

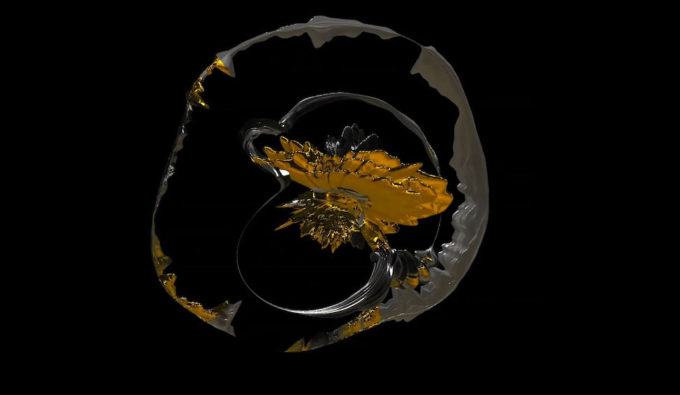

Aman Tiwari and Gray Crawford (2019)

Xoromancy, created collaboratively by Aman Tiwari and Gray Crawford, is an exploration of the gestural control of neural networks. It explores the near-infinite space of pseudoreal images generated by a neural network trained on millions of images. Participants move their hands to shift the influence and mixture of texture, color, and subject, training themselves in the ways of Xoromancy.

Xoromancy explores methods for gestural control of high-dimensional spaces, applied to the control of generative adversarial networks (GANs). GANs present incredible possibilities for generating novel images, but they are hard to control directly and flexibly because of their high-dimensional input spaces. Xoromancy’s intervention is to play with neural networks using our own bodies, a high-dimensional control system we already know how to use. In its current incarnation, Xoromancy uses the position and rotation of your hands to generate the input that is fed to the neural network.
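As a rough illustration of how hand position and rotation could become network input, the sketch below projects a 12-value pose (three position and three rotation values per hand) into a latent vector through a fixed random matrix. The latent size of 128, the random projection, and the names `make_projection` and `hands_to_latent` are assumptions made for illustration. The article does not describe Xoromancy’s actual mapping.

```python
import random

LATENT_DIM = 128  # assumed latent size; the article does not state it
POSE_DIM = 12     # 2 hands x (3 position values + 3 rotation values)


def make_projection(latent_dim=LATENT_DIM, pose_dim=POSE_DIM, seed=0):
    """Build a fixed random matrix that spreads pose values across latent dimensions."""
    rng = random.Random(seed)  # fixed seed so the same gesture always gives the same vector
    return [[rng.gauss(0.0, 1.0) for _ in range(pose_dim)] for _ in range(latent_dim)]


def hands_to_latent(left, right, projection):
    """Map two hand poses to one latent vector (hypothetical mapping).

    Each hand is (x, y, z, pitch, yaw, roll), pre-scaled to roughly [-1, 1].
    """
    pose = list(left) + list(right)
    if len(pose) != len(projection[0]):
        raise ValueError("pose length does not match the projection matrix")
    # Each latent dimension is a weighted sum of all pose values, so one
    # small hand movement shifts many latent dimensions at once.
    return [sum(w * p for w, p in zip(row, pose)) for row in projection]


projection = make_projection()
latent = hands_to_latent((0.1, 0.0, 0.3, 0.0, 0.5, 0.0),
                         (-0.2, 0.1, 0.0, 0.4, 0.0, 0.0),
                         projection)
```

Because the mapping is linear and continuous, nearby hand poses give nearby latent vectors, which is the property that lets motion and image change feel connected.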

Xoromancy’s motion-based user interface sets it apart. The correlation between proprioception and visual feedback builds an intuitive understanding of how to navigate the highly nonlinear mappings between input dimensions and generated output imagery. Many other tools for exploring the latent space of generative adversarial networks provide only stepwise, image-by-image traversal. Xoromancy’s use of hand tracking to control many latent space vectors simultaneously, with real-time image response, enables rapid and fluent exploration.

Xoromancy is the first interactive real-time tool leveraging the human body’s proprioception and fine motor skills to control the generation of images by neural networks. These images, produced by BigGAN (Brock et al., 2018), are high-resolution and can often photorealistically represent objects and scenes that would otherwise be prohibitively time-consuming to produce. The aesthetic draw of Xoromancy is that these BigGAN images range from the highly realistic to the distinctly surreal. The outputs often contain perceptual cues of realistic lighting, texture, and form, but with decidedly unreal subjects.

Aman Tiwari worked as the primary software engineer, using Unity and Python with TensorFlow to develop Xoromancy. Tiwari and Crawford used a Leap Motion sensor for hand tracking. The following video presents the visual effects of Xoromancy and shows how the imagery moves in parallel with the hands.

Xoromancy: Image Creation via Gestural Control of High-Dimensional Spaces from Gray Crawford on Vimeo.

The input spaces of BigGAN and similar networks are extremely high-dimensional, which makes them prohibitively difficult to explore and conceptualize. Exhaustive exploration is intractable, and random exploration is slow and often unrewarding.
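To give a sense of scale, the short calculation below counts how many images a grid search would have to visit. The latent size of 128, the two sample values per dimension, and the viewing rate of 30 images per second are assumptions chosen for illustration, not figures from the project.

```python
LATENT_DIM = 128        # assumed latent size
STEPS_PER_DIM = 2       # coarsest possible grid: two values per dimension
IMAGES_PER_SECOND = 30  # assumed viewing rate

SECONDS_PER_YEAR = 365 * 24 * 3600


def grid_size(dims, steps):
    """Number of points on a regular grid with `steps` values in each of `dims` dimensions."""
    return steps ** dims


def years_to_view(num_images, rate):
    """Years needed to look at every image once at `rate` images per second."""
    return num_images / rate / SECONDS_PER_YEAR


total = grid_size(LATENT_DIM, STEPS_PER_DIM)
print(f"{total:.3e} images, about {years_to_view(total, IMAGES_PER_SECOND):.1e} years")
```

Even this coarsest grid has 2^128 points, roughly 3.4 × 10^38 images, which is why a guided, continuous way of moving through the space matters more than coverage.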

The developers recognized that the human body is itself a continuous, high-dimensional control system, and that modern body tracking, such as the Leap Motion sensor, lets the body’s motion drive the high-dimensional input vector controlling the GAN’s visual output.

Although the possible dimensions of bodily movement are many, only a subset are mechanically comfortable to control and conceptually intuitive. The input mappings chosen for Xoromancy support both fast exploration through a variety of images and specific refinement towards a goal once an image has been found. Xoromancy includes distinct gesture categories to independently control the subject of the generated image and its specific composition and form.
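One way to picture independent control of subject and composition is sketched below. It assumes that "subject" corresponds to a class-conditioning vector over 1000 ImageNet classes and that "composition and form" correspond to a separate latent vector, which matches how class-conditional BigGAN models take input. The gesture assignments, the `gain` parameter, and the function names are hypothetical and do not come from the article.

```python
NUM_CLASSES = 1000  # assumed: one entry per ImageNet class


def blend_classes(class_a, class_b, mix, num_classes=NUM_CLASSES):
    """Cross-fade between two subjects; `mix` is 0.0 for class_a and 1.0 for class_b."""
    mix = min(1.0, max(0.0, mix))  # clamp so a stray gesture cannot leave the range
    vec = [0.0] * num_classes
    vec[class_a] += 1.0 - mix
    vec[class_b] += mix
    return vec


def nudge_latent(latent, direction, amount, gain=1.0):
    """Move the composition vector along `direction`.

    A large `gain` suits fast exploration; a small `gain` suits refinement
    once an interesting image has been found.
    """
    return [z + gain * amount * d for z, d in zip(latent, direction)]


# Hypothetical gesture assignment: one gesture sets the subject mix,
# another moves the composition, and neither affects the other.
subject = blend_classes(class_a=3, class_b=7, mix=0.25)
composition = nudge_latent([0.0, 0.0, 0.0], [1.0, 0.0, -1.0], amount=0.5, gain=0.1)
```

Keeping the two vectors in separate functions means a change of subject leaves the composition untouched and vice versa, which mirrors the distinct gesture categories described above.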