Volumetric Performance for Digital Theater

Sean Byrum Leo (2021). Volumetric Performance for Digital Theater by Sean Leo :: Supported by FRFAF (#2021-026), from STUDIO for Creative Inquiry on Vimeo.

Sean Byrum Leo, an MFA student in the Video & Media Design program of the CMU School of Drama, developed his graduate thesis project with support from the Frank-Ratchye STUDIO for Creative Inquiry, Microgrant #2021-026.

As theatrical projects faced the effects of the Covid-19 pandemic, productions were indefinitely postponed, cancelled outright, or downsized to public readings hosted on popular video conferencing platforms; some projects were translated to, or entirely produced for, digital presentation online using those same platforms.

As a Media Designer, I spent much of the year during the pandemic developing tools and workflows to present digital productions, using a mix of commercial, open source, and custom tools, such as Zoom, NDI, OBS, VDO Ninja, and TouchDesigner. Many of these solutions and workflows were cobbled together as a reaction to the limitations facing digital productions, rather than actively developed for what digital productions and theater could be.

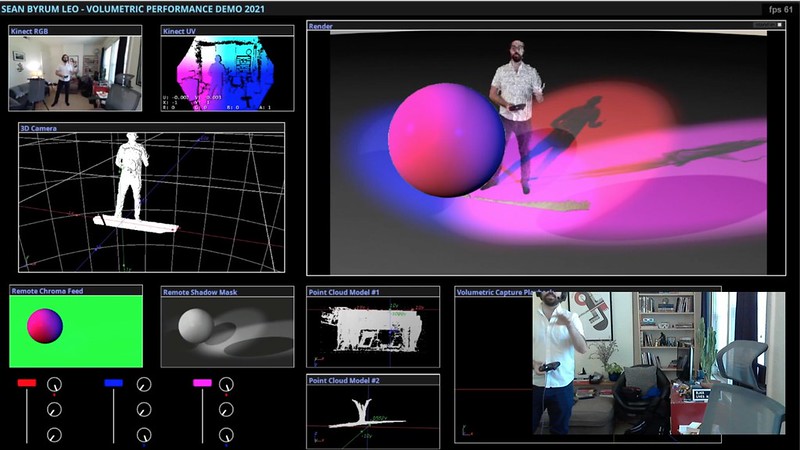

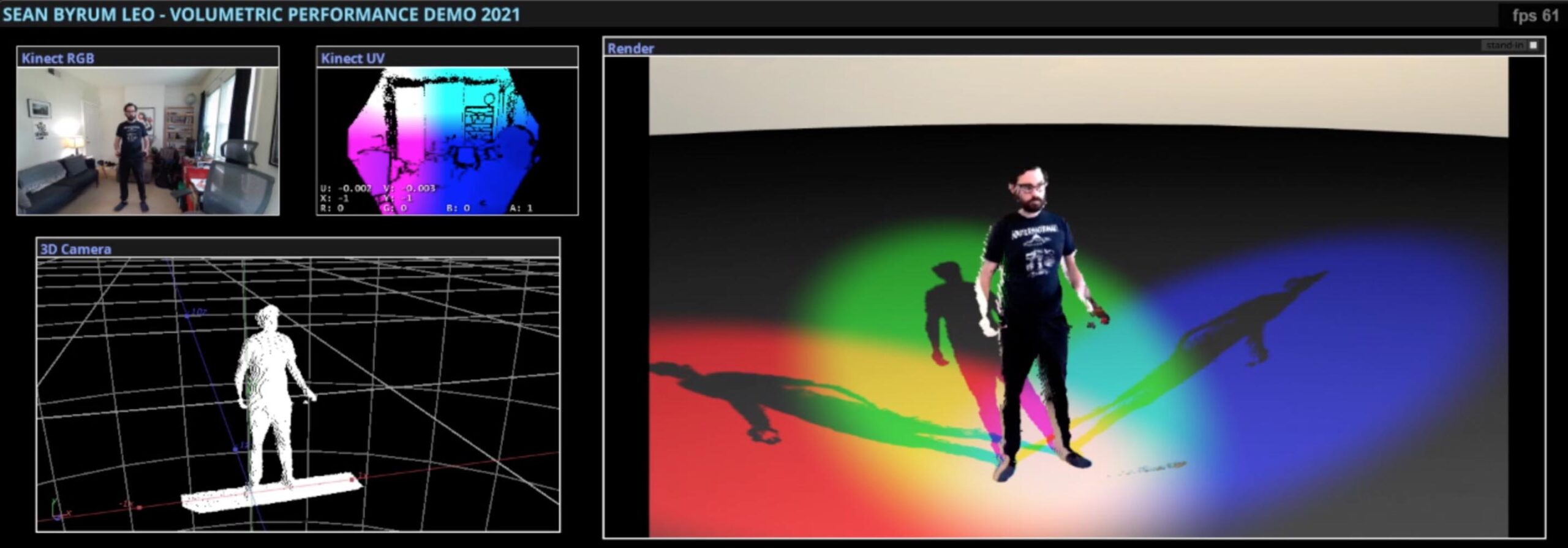

For my thesis, I pursued the development of a creative methodology and technical workflow for real-time volumetric performances by digitizing and compositing remote performers together in the same virtual environment. This allows a sense of shared space and digital presence that is not offered by the standard static, boxed perspective of video conferencing, and instead offers dynamic uses of media tools and techniques to produce a digital performance aesthetic that is real and live.

By using the Microsoft Kinect (Kinect V2 or Azure Kinect) and TouchDesigner, I was able to generate a real-time point cloud model of a subject that can be placed in the 3D digital environment. A benefit of point clouds and volumetric capture is their basis in photogrammetry. Rather than a shiny modeled avatar or 3D puppet, the RGB data collected from the camera and sensor allow the model to be represented photographically. It is clear the model is digitized, but it is also clear that the model looks like the subject, without descending too far into the uncanny valley.
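To make the idea of a colored point cloud concrete, here is a minimal sketch of how a depth image can be back-projected into 3D points that carry their RGB values. This is not the TouchDesigner network used in the project; it is a standard pinhole-camera back-projection, and the function name and the intrinsics (`fx`, `fy`, `cx`, `cy`) are hypothetical values chosen for illustration.

```python
from typing import List, Sequence, Tuple

RGB = Tuple[int, int, int]
ColoredPoint = Tuple[float, float, float, RGB]


def depth_to_points(
    depth_mm: Sequence[Sequence[int]],
    color: Sequence[Sequence[RGB]],
    fx: float,
    fy: float,
    cx: float,
    cy: float,
) -> List[ColoredPoint]:
    """Back-project a depth image (millimetres) into colored 3D points (metres).

    Assumes the color image is already aligned to the depth image and that
    a depth of 0 means "no reading" for that pixel.
    """
    points: List[ColoredPoint] = []
    for v, row in enumerate(depth_mm):
        for u, d in enumerate(row):
            if d <= 0:
                continue  # skip pixels where the sensor returned no depth
            z = d / 1000.0          # millimetres to metres
            x = (u - cx) * z / fx   # pinhole model: horizontal offset scaled by depth
            y = (v - cy) * z / fy   # pinhole model: vertical offset scaled by depth
            points.append((x, y, z, color[v][u]))
    return points
```

Each resulting point keeps the photographic color of the pixel it came from, which is what lets the model read as the subject rather than as an avatar.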

Another benefit of this process is that the created environment and model are viewed through a virtual camera that can be positioned and moved dynamically in real time. Operators can execute live switching between positions in space, or complex camera moves, through a MIDI pad or joystick/game controller (in my project I used a wireless Xbox game controller) mapped to the transform properties of the virtual camera. The result is like watching a digital live cinematic production. The environment and lighting can also be driven dynamically through similar interactions with hardware interfaces, allowing for impossible scenic changes and digital effects. Further extrapolating this idea could lead to performers themselves being outfitted with sensors, or using their phones, to affect and control various aspects of the digital world their virtual model occupies, allowing the performers to creatively respond to and collaborate with the media.
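As a rough illustration of mapping controller input to the camera's transform, the sketch below moves a virtual camera from normalized stick values in the range -1 to 1. The `Camera` class, the axis names, the speeds, and the coordinate convention (yaw 0 faces +Z) are all assumptions made for this example, not the mapping used in the project.

```python
import math
from dataclasses import dataclass


@dataclass
class Camera:
    x: float = 0.0
    y: float = 0.0
    z: float = 0.0
    yaw_deg: float = 0.0  # rotation around the vertical axis


def apply_deadzone(value: float, deadzone: float = 0.1) -> float:
    """Ignore small stick values so the camera does not drift at rest."""
    return 0.0 if abs(value) < deadzone else value


def update_camera(
    cam: Camera,
    left_x: float,
    left_y: float,
    right_x: float,
    dt: float,
    move_speed: float = 1.0,   # metres per second (hypothetical)
    turn_speed: float = 90.0,  # degrees per second (hypothetical)
) -> Camera:
    """Advance the camera by one frame of controller input.

    Left stick strafes (x) and moves forward/back (y); right stick turns.
    """
    lx = apply_deadzone(left_x)
    ly = apply_deadzone(left_y)
    rx = apply_deadzone(right_x)

    # Move relative to the direction the camera currently faces.
    yaw = math.radians(cam.yaw_deg)
    forward_x, forward_z = math.sin(yaw), math.cos(yaw)
    right_vec_x, right_vec_z = math.cos(yaw), -math.sin(yaw)
    cam.x += (right_vec_x * lx + forward_x * ly) * move_speed * dt
    cam.z += (right_vec_z * lx + forward_z * ly) * move_speed * dt

    # Turn after moving, wrapping the angle into [0, 360).
    cam.yaw_deg = (cam.yaw_deg + rx * turn_speed * dt) % 360.0
    return cam
```

Scaling by `dt` keeps the camera speed independent of frame rate, which matters when the render load varies during a live performance.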

Integrating remote performers into the same scene would require a mirror of the operator's system. The operator (Host) would control the camera, lighting, and scenic elements, which would be updated on the performers' (Node) end by establishing a remote OSC connection via ZeroTier (a VPN solution). The difference between the node systems would be the world-space position of each model, which helps the illusion of parallax when composited. As lighting is updated on the host's end, it is also updated on each node's end, allowing shadows to be generated as if they all came from the same source when composited together. Each node process then streams a multi-view to the host to be composited into the full scene.

This process hinges on the computational power available to each individual, which at the moment is cost prohibitive given the expense of a Kinect sensor, a computer, and the graphics hardware required to render (I ran my project on an RTX 3080). But as the world moves past the restrictions of Covid-19, and we are allowed to share spaces again, fewer computing resources would be needed. We can also expect a more streamlined implementation of this process: as telematic technology becomes faster and more efficient, and depth sensors become more ubiquitous, this digital aesthetic will be utilized more and more.

I believe volumetric performance and real-time processes can offer new opportunities for Digital Theater to experiment with new performance aesthetics and generate creative ways to tell engaging stories.

This project was supported in part by Microgrant #2021-026 from the Frank-Ratchye Fund for Art @ the Frontier.