Tactum: Skin-Centric Design

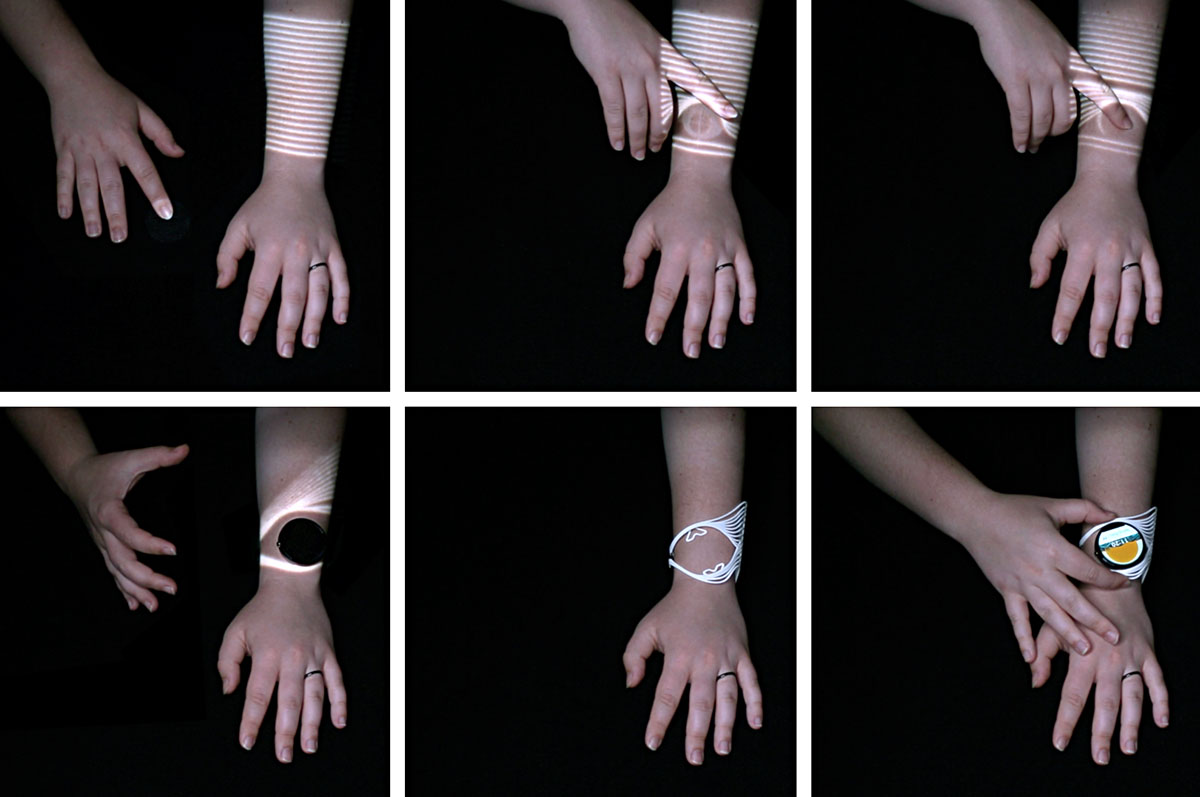

Madeline Gannon (2015)

Tactum is an augmented modeling tool that lets you design 3D printed wearables directly on your body. It uses depth sensing and projection mapping to detect and display touch gestures on the skin. A person can simply touch, poke, rub, or pinch the geometry projected onto their arm to customize ready-to-print, ready-to-wear forms. Tactum was developed by architecture doctoral student Madeline Gannon in collaboration with members of the User Interface Group at Autodesk Research.

Design for the body, on the body

Tactum extracts features from the user’s body to generate the interactive digital geometry that is projected onto the skin. This embeds a level of ergonomic intelligence into the form: wearable designs are inherently sized to fit the designer. Tactum also embeds 3D printing fabrication constraints into the geometry. No matter how the designer manipulates the projected forms, every design is always ready to be 3D printed and worn back on the body.
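
One way to picture how fabrication constraints can be embedded in the geometry is to clamp every gesture-driven parameter to a printable range before the model updates. The sketch below is only an illustration under assumptions: `BandParams`, `apply_gesture`, and the wall-thickness limits are hypothetical names and values, not taken from Tactum itself.

```python
from dataclasses import dataclass

# Hypothetical printability limits, chosen only for illustration.
MIN_WALL_MM = 1.0
MAX_WALL_MM = 6.0


@dataclass(frozen=True)
class BandParams:
    wrist_circumference_mm: float  # extracted from the body, fixes the fit
    wall_thickness_mm: float       # adjusted by skin gestures


def clamp(value: float, low: float, high: float) -> float:
    """Limit value to the closed interval [low, high]."""
    return max(low, min(high, value))


def apply_gesture(params: BandParams, thickness_delta_mm: float) -> BandParams:
    """Return updated parameters, kept inside the assumed printable range."""
    thickness = clamp(
        params.wall_thickness_mm + thickness_delta_mm, MIN_WALL_MM, MAX_WALL_MM
    )
    # The body-derived measurement is carried over unchanged.
    return BandParams(params.wrist_circumference_mm, thickness)
```

In this sketch, a gesture that would thin the wall below the assumed minimum simply stops at the minimum, so any state the designer reaches remains printable.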

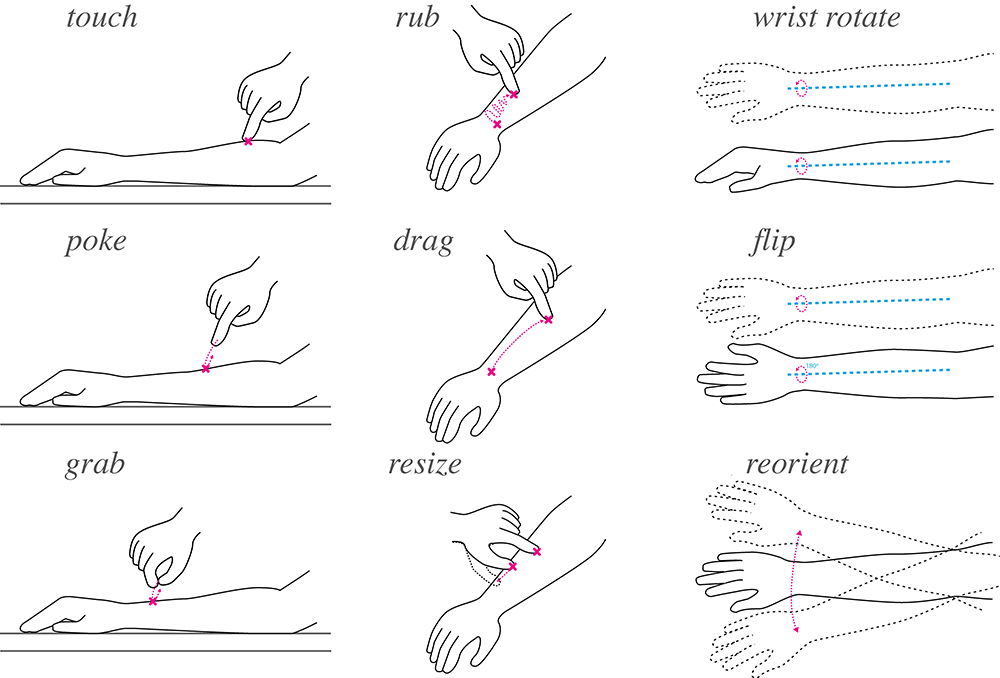

Intuitive gesture, precise geometry

Gestures within Tactum are designed to be as natural as possible: as you touch, poke, or pinch your skin, the projected geometry responds as dynamic feedback. Although these gestures are intuitive and expressive, they are also fairly imprecise. Their minimum tolerance is around 20 mm (the approximate size of a fingertip). While this is adequate when designing some wearables, like in the example above, it is inadequate for designing wearables around precise, existing objects.

In this example, we use Tactum to create a new watch band for the Moto 360 Smartwatch. Skin gestures enable the designer to set the position and orientation of the watch face on the wrist and to craft the overall form. However, there are a number of hard constraints that need to be extremely precise in order for the watch face to fit, and the new band to function. For example, the clips to hold the watch face and the clasp to close the band onto the arm have exact measurements and tolerances required for both fit and fabrication.

Tactum uses intelligent geometry to strike a balance between intuitive gesture and precise constraints. Here, the exact geometries for the clips and clasp of the smartwatch are topologically defined within the band’s parametric model. In this example, the CAD backend (below) wraps and pipes the projected geometry around an existing 3D scan of the forearm. The clips and clasp, while dependent on the overall watch band geometry, cannot be directly modified by any gestures. The CAD backend places and generates those precise geometries once the user has finalized a design.
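
The split between gesture-driven and locked geometry can be sketched as a parametric model that accepts gesture updates only for a whitelist of parameters and adds the precise features at finalization. This is a minimal sketch under assumptions, not Tactum's CAD backend: `WatchBandModel`, the parameter names, and the clip and clasp dimensions are hypothetical placeholders, not real Moto 360 measurements.

```python
from dataclasses import dataclass

# Parameters a skin gesture is allowed to change (hypothetical names).
GESTURE_PARAMS = ("position_mm", "rotation_deg", "band_width_mm")

# Precise features that no gesture can touch. Placeholder values only,
# not actual watch measurements.
LOCKED_FEATURES = {"clip_gap_mm": 1.5, "clasp_pin_diameter_mm": 2.0}


@dataclass
class WatchBandModel:
    position_mm: float = 0.0    # location of the watch face along the wrist
    rotation_deg: float = 0.0   # orientation of the watch face
    band_width_mm: float = 24.0
    finalized: bool = False

    def on_gesture(self, name: str, value: float) -> None:
        """Apply a gesture update, rejecting locked or unknown parameters."""
        if self.finalized:
            raise RuntimeError("design is finalized")
        if name not in GESTURE_PARAMS:
            raise ValueError(f"{name!r} cannot be changed by gesture")
        setattr(self, name, value)

    def finalize(self) -> dict:
        """Freeze the design and attach the exact clip and clasp features."""
        self.finalized = True
        spec = {name: getattr(self, name) for name in GESTURE_PARAMS}
        spec.update(LOCKED_FEATURES)
        return spec
```

Calling `on_gesture("rotation_deg", 15.0)` succeeds, while `on_gesture("clip_gap_mm", 9.0)` raises `ValueError`. The exact dimensions only enter the output of `finalize()`, which mirrors the idea that imprecise input shapes the overall form while the precise parts stay under the model's control.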

Pre-scanning the body is not entirely necessary when designing a wearable in Tactum. However, it ensures an exact fit once the printed form is placed back on the body. Between the 3D scan, the intelligent geometry, and intuitive interactions, Tactum is able to coordinate imprecise skin-based gestures to create very precise designs around very precise forms.

Physical Artifacts

Tactum has been used to create a series of physical artifacts around the forearm. These test different kinds of interactive geometries, materials, modeling modes, and fabrication machines. Shown below are a PLA print made on a standard desktop 3D printer, a nylon and rubber print made on a Selective Laser Sintering (SLS) 3D printer, and a rubbery print made on a Stereolithography (SLA) 3D printer.

Implementation details

Tactum has been explored through two research prototypes. The first prototype used an above-mounted Microsoft Kinect to detect and track skin gestures. It used a Microsoft Surface Pro 3 as an auxiliary display for showing digital geometry to the designer. Our second prototype switched to an above-mounted Leap Motion Controller for skin gestures. While this sensor provided more robust hand and arm tracking, gesture detection was more robust with the Kinect. The second prototype also switched from an auxiliary display to mapping and projecting geometry directly onto the body.

Acknowledgments and Additional Information

For full information about this project, please see the Tactum technical paper on skin-centric design. High-resolution images of Tactum can be found here. Tactum was developed in collaboration with the User Interface Group at Autodesk Research, and was supported in part by the Frank-Ratchye STUDIO for Creative Inquiry at Carnegie Mellon University.

Tactum has been presented at SXSW Interactive 2015, and at CHI 2015, where it received an Honorable Mention for Best Paper. In the popular press, Tactum has been featured on NOTCOT, Wired, Motherboard, The Creators Project, Gizmodo, Dezeen, Fast Company, 3dprint.com, 3Ders.org, 3D Printing Industry, and Blog.LeapMotion.com.

Photography: Jake Marsico

Videography: Sankalp Bhatnagar

Documentation: Themis Cádiz, Epic Jefferson, Julián Sandoval

This project was made possible with support from the Frank-Ratchye Further Fund Microgrant #2016-008.