Mimus

Madeline Gannon (2017)

The Mimus project was developed by Madeline Gannon through her research studio, ATONATON.

Mimus is a giant industrial robot that’s curious about the world around her. Unlike traditional industrial robots, Mimus has no pre-planned movements: she is programmed with the freedom to explore and roam about her enclosure. Mimus uses an array of depth sensors embedded in the ceiling to sense and respond to visitors. If she finds someone interesting, Mimus may come in for a closer look and follow them around for a bit. However, her attention span is limited: stay still for too long and she’ll try to get your attention … but eventually she will get bored and go find someone else to investigate.
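The attention span described above can be modeled as a small state machine driven by how much the current target is moving. What follows is a minimal sketch and is not ATONATON’s code. The state names, the stillness threshold, and both timeouts are hypothetical values chosen for illustration.

```python
from dataclasses import dataclass

# Hypothetical tuning values; the real thresholds are not published.
STILL_SPEED = 0.05    # m/s: below this, the visitor counts as "still"
PROVOKE_AFTER = 5.0   # s of stillness before Mimus tries to get attention
BORED_AFTER = 12.0    # s of stillness before Mimus gives up on this visitor


@dataclass
class Attention:
    """Attention toward one visitor: FOLLOW -> PROVOKE -> BORED."""
    state: str = "FOLLOW"
    still_time: float = 0.0

    def update(self, speed: float, dt: float) -> str:
        """Advance the state given the visitor's speed (m/s) over dt seconds."""
        if self.state == "BORED":
            return self.state  # sticky: the caller should pick a new target
        if speed > STILL_SPEED:
            # Any movement resets the boredom clock.
            self.still_time = 0.0
            self.state = "FOLLOW"
        else:
            self.still_time += dt
            if self.still_time >= BORED_AFTER:
                self.state = "BORED"
            elif self.still_time >= PROVOKE_AFTER:
                self.state = "PROVOKE"
        return self.state
```

Once `update` returns `"BORED"`, the caller would discard this `Attention` object and start a fresh one for the next visitor it selects.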

ROBOTS ARE CREATURES, NOT THINGS

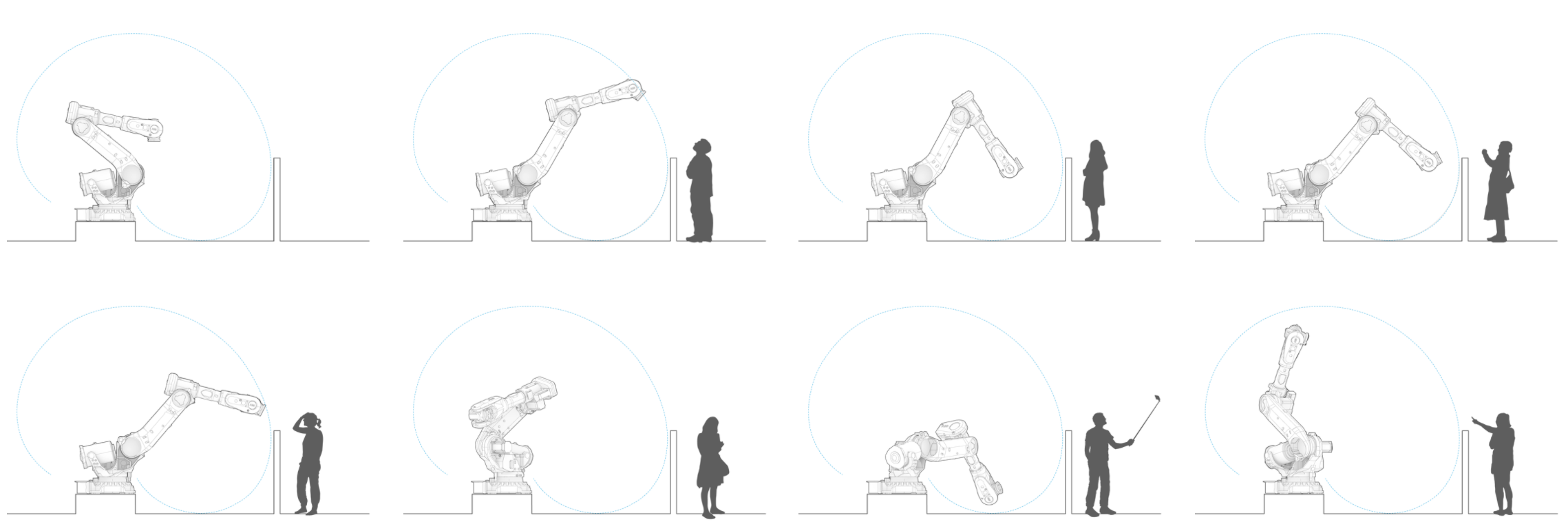

Every aspect of Mimus, from the interaction design to her physical environment, is designed so that visitors forget they are looking at a machine and instead see her as a living creature. This allows the robot’s body language and posture to broadcast a spectrum of emotional states to visitors: when Mimus sees you from far away, she looks down at you from a fairly intimidating pose, like a bear standing on its hind legs; when you walk closer, she approaches you from below, like a puppy that is excited to see you.
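One way to get this looming-versus-approaching behavior is to blend between two postures according to how far away the visitor is. The sketch below rests on several assumptions: a linear blend, a reduced pose consisting only of tool height and pitch, and hypothetical distances and angles. The installation’s actual posture logic is not described here.

```python
# Hypothetical reduced pose: tool height above the floor (mm) and pitch (degrees,
# negative = looking down). Distances are measured from the robot base (mm).
FAR_DIST, NEAR_DIST = 4000.0, 1000.0
LOOM = {"height": 2800.0, "pitch": -45.0}   # far visitor: tall, looking down
CROUCH = {"height": 600.0, "pitch": 20.0}   # near visitor: low, looking up


def posture_for_distance(dist_mm: float) -> dict:
    """Linearly blend from the looming pose (far) to the crouching pose (near)."""
    # t = 0 at or beyond FAR_DIST, t = 1 at or inside NEAR_DIST.
    t = (FAR_DIST - dist_mm) / (FAR_DIST - NEAR_DIST)
    t = max(0.0, min(1.0, t))
    return {k: LOOM[k] + t * (CROUCH[k] - LOOM[k]) for k in LOOM}
```

For a visitor 2.5 m away, halfway between the two thresholds, this returns a height of 1700 mm and a pitch of -12.5 degrees.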

When something responds to us with lifelike movements — even when it is clearly an inanimate object — we cannot help but project our emotions onto it. This is just human nature.

For Mimus, body language acts as a medium for cultivating empathy between museumgoers and a piece of industrial machinery. This primitive yet fluid means of communication gives visitors an intuitive understanding of the robot’s behaviors, kinematics, and limitations. Mimus’ movements may not always be predictable, but they are always comprehensible to the people around her.

FROM DOMINANCE TO CO-EXISTENCE

Our current model for robotics and automation consists primarily of systems for optimization and control: we tell robots what to do, and they do it with maximum effectiveness. This human-robot relationship has served us very well, and over the past 50 years robotic automation has led to unprecedented innovation and productivity in agriculture, medicine, and manufacturing.

However, we are reaching an inflection point. Rapid advances in machine learning and artificial intelligence are making robotic systems smarter and more adaptable than ever, but these advances also inherently weaken our direct control over, and relevance to, autonomous machines. Similarly, robotic manufacturing, despite its benefits, is arriving at a great human cost: the World Economic Forum estimates that over the next four years, rapid growth of robotics in global manufacturing will place the livelihoods of 5 million people at stake. What should be clear by now is that the robots are here to stay. So rather than continue down the path of optimizing our own obsolescence, now is the time to rethink how humans and robots will co-exist on this planet.

As we go from operating robots to cohabitating with them, one of the biggest challenges we face is communicating with these machines. Take, for example, autonomous vehicles. Currently, there is no way for a pedestrian to read the intentions of a driverless car, and this lack of legibility can lead to disastrous results. As non-humanoid autonomous robots such as drones, cars, trucks, and co-workers become increasingly prevalent in our daily lives, we will need more effective ways of communicating with them.

IMPLEMENTATION DETAILS

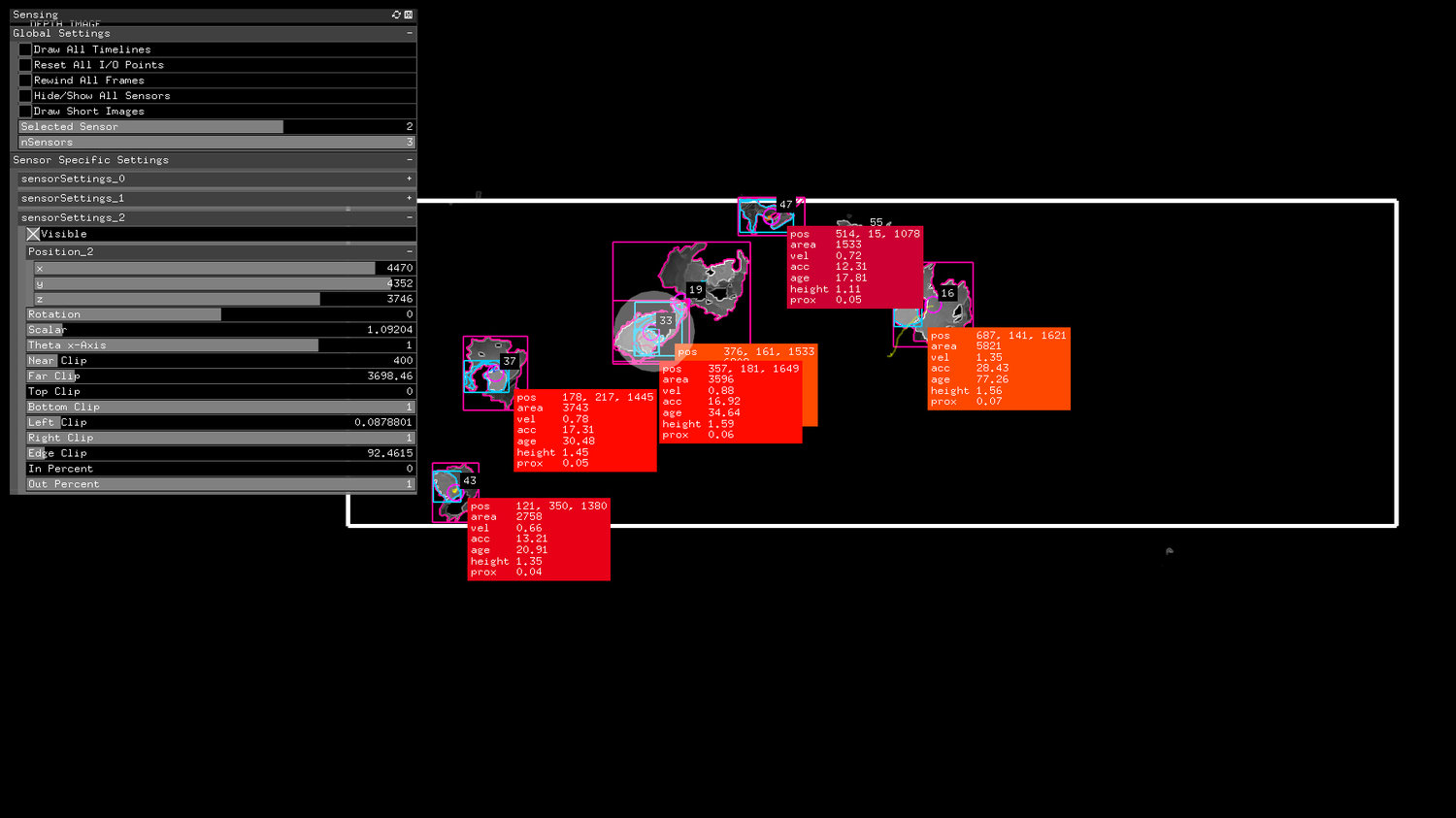

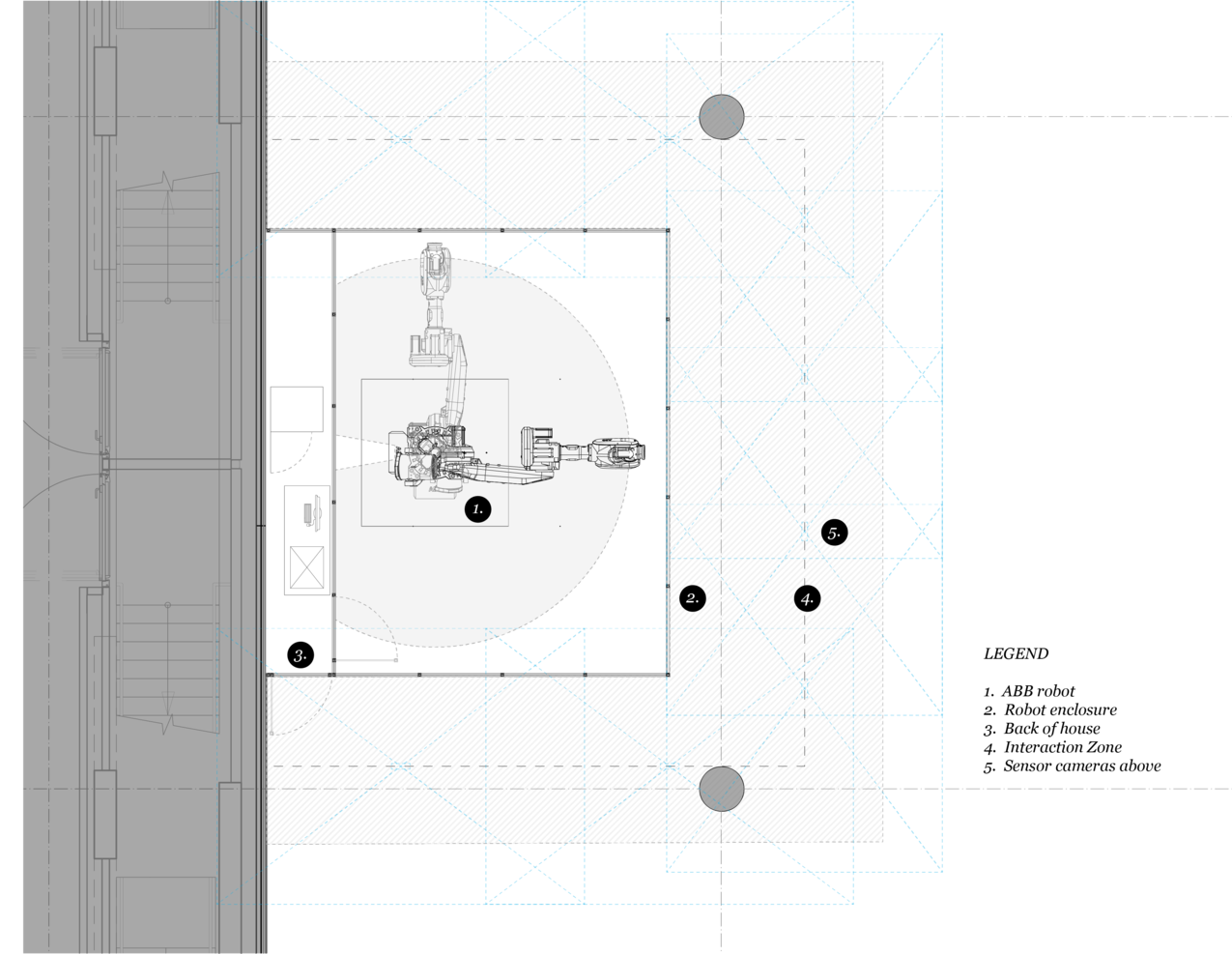

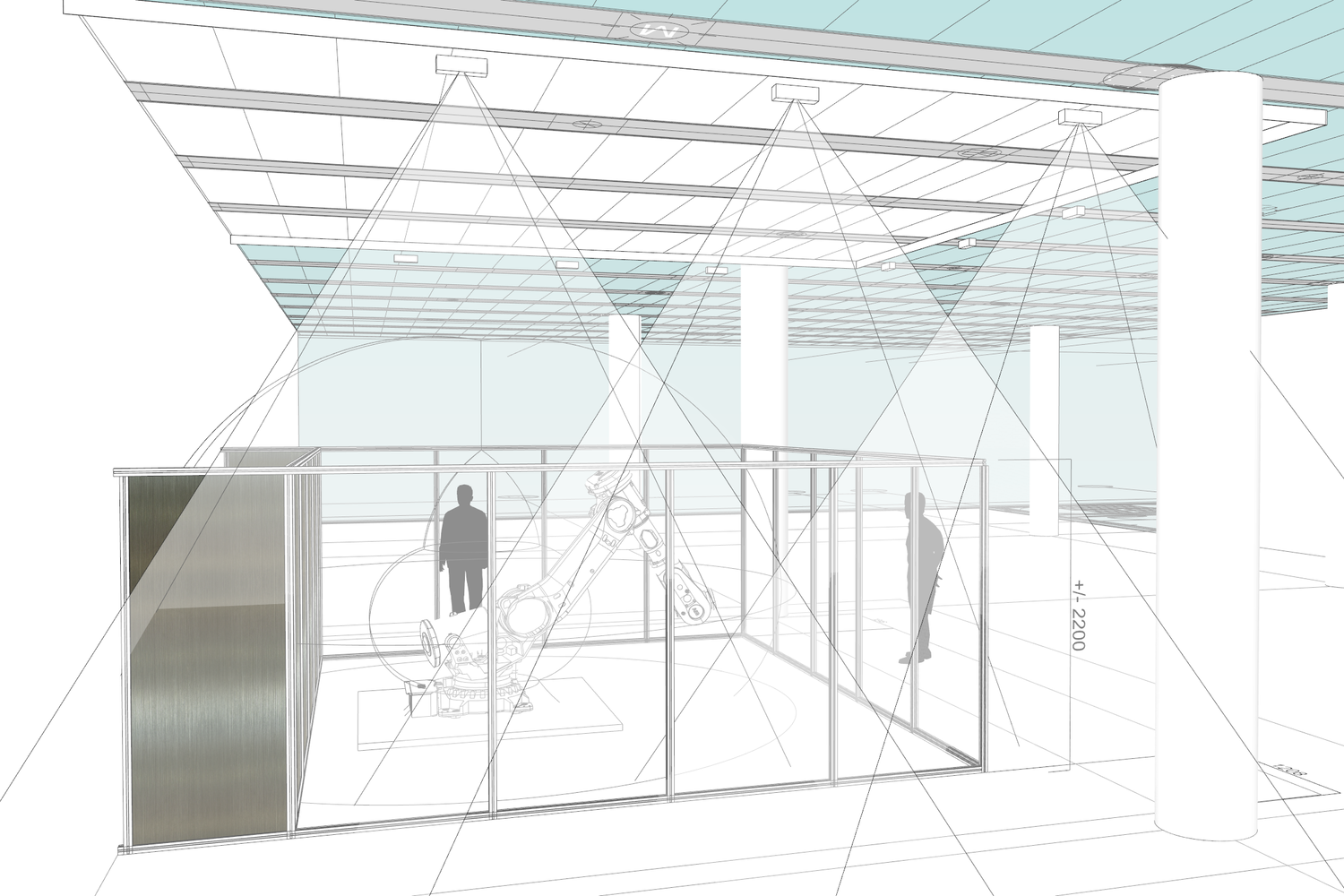

Mimus uses three layers of custom-built software to transform an ABB IRB 6700 industrial robot into a living, breathing mechanical creature. The first layer handles all of the data streaming from the eight depth sensors embedded in the ceiling. Our software stitches together the depth data from each sensor to form a single point cloud of the perimeter around the robot’s enclosure. This unified point cloud provides the 3D information needed for low-latency people tracking and basic gesture detection. The sensor array has an effective tracking area of approximately 45 m² and tracks between 500 mm and 2.2 m in height (about 20 in to 7 ft). This portion of the codebase was developed in openFrameworks, an open-source C++ toolkit for creative coding.
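Stitching several depth sensors together amounts to two steps. First, every sensor’s points are moved into one shared room coordinate frame using that sensor’s calibrated pose (its extrinsics). Then the results are concatenated. The sketch below does this in plain Python and also applies the 500 mm to 2.2 m height band mentioned above. The calibration values, the z-up room frame, and the function names are all assumptions; the actual layer was written in C++ with openFrameworks.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]
# Hypothetical sensor pose: 3x3 rotation R and translation t (metres), sensor -> room.
Extrinsic = Tuple[Sequence[Sequence[float]], Sequence[float]]

MIN_Z, MAX_Z = 0.5, 2.2  # tracked height band from the text (metres, z up from floor)


def to_room(p: Point, ext: Extrinsic) -> Point:
    """Apply the rigid transform p' = R p + t."""
    R, t = ext
    return tuple(sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3))


def stitch(clouds: List[List[Point]], extrinsics: List[Extrinsic]) -> List[Point]:
    """Merge per-sensor clouds into one room-frame cloud, keeping the tracked band."""
    merged: List[Point] = []
    for cloud, ext in zip(clouds, extrinsics):
        for p in cloud:
            q = to_room(p, ext)
            if MIN_Z <= q[2] <= MAX_Z:  # drop floor and ceiling returns
                merged.append(q)
    return merged
```

Consider a sensor mounted 3 m up and pointing straight down, with R = diag(1, -1, -1) and t = (0, 0, 3). A return 1.5 m from that sensor lands at z = 1.5 m and is kept. A return from the floor lands near z = 0 and is dropped.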

Each detected person is tracked and assigned attributes as they move around the space. Some attributes are explicit, like position, age, proximity, height, and area; others are implicit, like activity level and engagement level. Mimus uses these attributes to find the “most interesting person” in her view. ATONATON’s software dynamically weights these attributes so that, for example, on one day Mimus may favor people with lower heights (e.g., kids), and on another day she may favor people with the greatest age (i.e., people who have been at the installation the longest). Once a person grabs Mimus’ attention, they have to work to keep it: as soon as they are no longer the most interesting person, Mimus will get bored and go find someone else to investigate.
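Finding the “most interesting person” can be read as a weighted score over each person’s attributes, with different weight sets producing different preferences. The sketch below makes several assumptions: min-max normalization across the current crowd, a linear score, a hypothetical subset of attributes, and hypothetical weight profiles and values.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Person:
    pid: int
    height: float     # metres
    age: float        # seconds since first detected at the installation
    proximity: float  # 0 (far from the enclosure) .. 1 (right at the edge)
    activity: float   # 0 (still) .. 1 (very active)


# Hypothetical weight profiles; a negative weight favours *smaller* values.
FAVOR_KIDS = {"height": -1.0, "age": 0.0, "proximity": 0.5, "activity": 0.5}
FAVOR_REGULARS = {"height": 0.0, "age": 1.0, "proximity": 0.5, "activity": 0.0}


def normalize(people: List[Person], attr: str) -> Dict[int, float]:
    """Min-max scale one attribute to [0, 1] across everyone currently tracked."""
    values = [getattr(p, attr) for p in people]
    lo, hi = min(values), max(values)
    span = (hi - lo) or 1.0  # avoid dividing by zero when everyone is equal
    return {p.pid: (getattr(p, attr) - lo) / span for p in people}


def most_interesting(people: List[Person], weights: Dict[str, float]) -> Optional[Person]:
    """Return the person with the highest weighted score, or None if nobody is tracked."""
    if not people:
        return None
    norm = {attr: normalize(people, attr) for attr in weights}

    def score(p: Person) -> float:
        return sum(w * norm[attr][p.pid] for attr, w in weights.items())

    return max(people, key=score)
```

Re-running `most_interesting` on every frame produces the switching behavior described above. As soon as another visitor scores higher, the target changes.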

The second software layer runs directly on the robot’s onboard computer. Written in RAPID, the programming language used to control ABB industrial robots, this program simply listens for specific movement commands sent from a PC. Once a command is received and parsed, the robot physically moves to the position it was given. The final software layer acts as a bridge between our sensing software and the robot. It enforces hard limits and checks to ensure that the robot can’t move into potentially damaging positions, but otherwise Mimus is free to roam within the bounds set for her.
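The bridge layer’s hard limits can be pictured as two checks applied to every target before it is sent. First, the target is clamped into a safe box. Second, the distance a single command may move the robot is capped. This sketch is an illustration only. The box bounds, the step limit, and the plain-text `MOVE x y z` message format are all assumptions and do not describe the protocol that Mimus’ RAPID listener actually uses.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]

# Hypothetical safety envelope in the robot base frame (mm) and per-command step cap.
SAFE_MIN: Vec3 = (-2500.0, -2500.0, 300.0)
SAFE_MAX: Vec3 = (2500.0, 2500.0, 3000.0)
MAX_STEP_MM = 100.0


def limit_target(current: Vec3, target: Vec3) -> Vec3:
    """Clamp a target into the safe box, then cap one move's length.

    Assumes `current` is already inside the safe box.
    """
    clamped = tuple(min(max(v, lo), hi) for v, lo, hi in zip(target, SAFE_MIN, SAFE_MAX))
    delta = [c - p for c, p in zip(clamped, current)]
    dist = sum(d * d for d in delta) ** 0.5
    if dist > MAX_STEP_MM:
        # Move only MAX_STEP_MM along the straight line toward the clamped target.
        scale = MAX_STEP_MM / dist
        clamped = tuple(p + d * scale for p, d in zip(current, delta))
    return clamped


def encode_command(pos: Vec3) -> str:
    """Serialize a target as one text line; this MOVE format is an assumption."""
    return "MOVE {:.1f} {:.1f} {:.1f}\n".format(*pos)
```

Because the safe box is convex, shortening a step that starts inside the box and heads toward a clamped target keeps the result inside the box as well.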

PHYSICAL DESIGN

Madeline approached the physical design of the installation as if she were bringing a wild animal into a museum gallery. As with zoos and menageries, the design is two-fold: the staging and enclosure for the creature, and its interactions with visitors. For the physical design, the challenge was to integrate the necessary safety and sensing infrastructure in a way that still fostered awe, wonder, and spectacle when visitors interact with her robot.

PROJECT CREDITS

Commissioned by The Design Museum, June 2016

Development Team: Madeline Gannon, Julián Sandoval, Kevyn McPhail, Ben Snell

Sponsors: Autodesk, Inc., ABB Ltd., The Frank-Ratchye Studio for Creative Inquiry

PRESS ABOUT MIMUS

- Clark, Liat. “The robot whisperer who tames giant industrial machine ‘monsters’ to do her bidding”. Wired UK, 11/5/2016.

- Dowd, Vincent. “Design Museum: A glossy new era and home”. BBC News, 11/24/2016.

- James, Andrea. “Madeline Gannon’s Mimus examines robot-human interdependence”. Boing Boing, 11/25/2016.

- Mannix, Kim. “Industrial Robot Meets Artificial Intelligence to Create Art”. ENGINEERING.com, 1/24/2017.

- Neumann, Detlev. “Autodesk macht Industrieroboter ‘Mimus’ (fast) zum Schoßhund” [Autodesk makes industrial robot ‘Mimus’ (almost) a lapdog]. mittelstand DIE MACHER, 1/12/2017.

- Pangburn, DJ. “Industrial Robot Reprogrammed to Get Bored and Curious Like a Living Thing”. The Creators Project, 1/5/2017.

- Sayej, Nadja. “This One Ton Robot Was Created to Ease Your Fears of a Robot Takeover”. Motherboard, 11/15/2016.